Fiscal Year 2007 Accomplishments

SciDAC Visualization and Analytics Center for Enabling Technologies FY07 Accomplishments

SciDAC Institute for Ultra-scale Visualization FY07 Accomplishments

SciDAC Scientific Data Management Center FY07 Accomplishments

High Performance, Petascale Visualization and Analytics FY07 Accomplishments

SciDAC Visualization and Analytics Center for Enabling Technologies FY07 Accomplishments

1. New capability: production-quality, petascale-capable software infrastructure for visual data analysis deployed at DOE Open Computing facilities. This new capability aims to provide a new class of visual data understanding technologies to SciDAC, INCITE and other researchers at DOE's Open Computing facilities. It can load and analyze the massive datasets now being produced at DOE's computing facilities and transmit the visual results over the Internet to remotely located researchers. The software, which is Open Source, runs on today's largest (and smallest) computing platforms and is in use today by most SciDAC and INCITE projects for production visual data analysis.

2. New capability: production-quality, petascale-capable Adaptive Mesh Refinement (AMR) visual data analysis deployed at DOE Open Computing facilities. In previous years, science researchers who use the AMR technique for grid generation and simulation had no choice but to fund research and development of visual data analysis software out of their own budgets, because production tools offered little support for AMR visual data analysis. VACET has delivered new capabilities to this community in the form of Open Source, production-quality, petascale-capable visual data analysis software that is deployed at DOE's open computing facilities. In addition to numerous scientific and technical benefits, this accomplishment has a pronounced economic benefit: teams that take advantage of the software no longer need to allocate a portion of their research dollars to producing software infrastructure for visual data analysis. This cost saving for one group is multiplied across the many groups within SciDAC, INCITE and elsewhere that use the AMR technique to increase effective simulation resolution.

3. Multivariate visual data analysis capability. As simulations increase in resolution and fidelity with increases in computational power, they generate datasets that are larger and that contain many more variables representing the results of calculations in physics and chemistry. Our team has implemented several interrelated techniques that allow researchers to gain insight into complex physical phenomena in the large, time-varying multivariate datasets produced by today's SciDAC, INCITE and other simulations. While some of these techniques are early research prototypes, many are already included in a production-quality, petascale-capable visual data analysis software infrastructure deployed at DOE's open computing facilities. These techniques have proven effective in the fields of combustion, climate, fusion, astrophysics and accelerator modeling.

For more information, click on this link: www.vacet.org.

SciDAC Institute for Ultra-scale Visualization FY07 Accomplishments

1. Research:

PI: Kwan-Liu Ma

Collaborators: Stephane Ethier, PPPL; Wei-Li Lee, PPPL

Title: An Integrated Exploration Approach to Particle Data Analysis (A submission to IEEE CiSE summarizing this work is presently under review.)

Objective: Achieve interactive visualization and exploration of large time-varying multivariate particle data.

SciDAC Relevance:

Particle simulations are powerful tools for understanding the complex phenomena associated with many areas of physics research, including confined plasma and high energy particle beams. Analyzing the data produced by the simulations, however, presents a challenge due to the large quantity of particles, variables, and time steps. In this project, we have developed a data exploration system that visualizes time-varying, multivariate point-based data from gyrokinetic particle simulations. By utilizing two modes of interaction, physical space and variable space, the system allows scientists to explore collections of densely packed particles and discover interesting features within the data. From the results of this system, scientists at Princeton Plasma Physics Laboratory have been able to easily identify features of interest, such as the location and motion of particles that become trapped in turbulent plasma flow.

2. Research:

PIs: Kwan-Liu Ma and Robert Ross

Collaborators: Paul Fischer, ANL; Mike Papka, ANL

Title: In-Situ Data Reduction and Visualization for Large Scale 3D Simulations (An SC07 submission summarizing this work is presently under review.)

Objective: Investigate the feasibility of in-situ visualization for petascale/exascale simulations

SciDAC Relevance:

Almost all scientists who perform large-scale simulations use post-processing or co-processing data analysis. They usually dump as much raw simulation data as the storage capacity allows, and use a separate parallel computer to prepare the data for subsequent analysis and visualization. A naive realization of this strategy not only limits the amount of data that can be saved, but also turns I/O into a performance bottleneck when a large number of processors are used. In this project, we study simulation-time data reduction and preparation strategies coupled with appropriate parallel I/O support. Our goal is to significantly decrease both post-processing and I/O costs by using only a small fraction of supercomputing time to better prepare the data for visualization and analysis tasks. Our work thus provides new options for accelerating the overall process of scientific supercomputing and discovery. Such in-situ visualization could become the most plausible approach to petascale/exascale data visualization.
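The idea of reducing data at simulation time, rather than dumping raw output for later processing, can be illustrated with a toy time loop. This is only a sketch under stated assumptions: the 1D field, the block-averaging reduction, and the names `block_average` and `run_simulation` are hypothetical stand-ins and are not the project's actual reduction strategy or I/O layer.

```python
def block_average(field, factor):
    """Downsample a 1D field by averaging consecutive groups of `factor` samples."""
    return [
        sum(field[i:i + factor]) / len(field[i:i + factor])
        for i in range(0, len(field), factor)
    ]


def run_simulation(steps, size, factor, write_every):
    """Toy time loop: advance a field and keep only reduced snapshots."""
    field = [float(i) for i in range(size)]
    saved = []  # stands in for parallel I/O to disk (assumption)
    for step in range(1, steps + 1):
        field = [v + 1.0 for v in field]  # placeholder "physics" update
        if step % write_every == 0:
            # Reduce while the data is still in memory, then "write" it.
            saved.append((step, block_average(field, factor)))
    return saved


snapshots = run_simulation(steps=4, size=8, factor=4, write_every=2)
```

Each saved snapshot here holds a quarter of the raw samples, which is the kind of trade the paragraph describes: a small amount of extra work inside the simulation in exchange for less data to store and post-process.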

3. Research:

PI: Jian Huang

Collaborators: David Erickson, ORNL; John Drake, ORNL

Title: Uncertainty Visualization of Climate Simulations

Objective: Incorporate uncertainty in the analysis of time-varying multivariate data

SciDAC Relevance:

To understand the inner workings and complexity of climate change is a grand challenge. A key enabling factor to this scientific mission is a visualization and computational tool to study the relationships among the many different time-varying variables in the large scale simulations.

Teaming with climate scientists, we have already produced a prototype system allowing interactive study of time-varying multivariate climate simulations on the Powerwall display system (Everest) at ORNL. This system provides an unprecedented ability for a scientist to engage in hypothesis-driven exploration of the datasets, with the ability to (i) vary the parameters in a hypothesis in real time, and (ii) automatically sample a fuzzy range of parameters in a hypothesis, with real-time visual feedback. The system offers an urgently needed, intuitive and highly controllable way to study uncertainty when identifying, verifying and evaluating trends in simulated climate datasets.

4. Research:

PI: Han-Wei Shen

Collaborator: Ross Toedte, Oak Ridge National Laboratory

Title: A User Interface for Large Scale Data Visualization (IEEE Transactions on Visualization and Computer Graphics, Vol. 13, No. 1)

Objective: Develop a new interaction approach to large data visualization

SciDAC Relevance:

When visualizing very large scale data generated by typical SciDAC applications, one challenging task is to select the data at an appropriate resolution so that the visualization can be generated interactively while the amount of information conveyed remains high. We developed an interface that uses the concept of entropy from information theory to classify the information content at different levels of detail of a large scale data set.

From the user interface, the user can clearly see where in the domain high resolution data is needed so that features are not missed, and where low resolution data can be used to accelerate the calculations.
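As a rough illustration of how entropy can separate regions that need high resolution from regions that do not, the sketch below computes the Shannon entropy of a histogram of each block's values and flags high-entropy blocks. The binning, the threshold, and the names `shannon_entropy` and `choose_resolution` are assumptions made for this example; the published interface may measure information content differently.

```python
import math
from collections import Counter


def shannon_entropy(values, bins=8, lo=0.0, hi=1.0):
    """Shannon entropy (in bits) of a histogram of `values`, assumed to lie in [lo, hi]."""
    if not values:
        return 0.0
    width = (hi - lo) / bins
    counts = Counter(min(int((v - lo) / width), bins - 1) for v in values)
    n = len(values)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def choose_resolution(blocks, threshold_bits=1.0):
    """Map each block id to 'high' or 'low' depending on its entropy."""
    return {
        block_id: "high" if shannon_entropy(vals) > threshold_bits else "low"
        for block_id, vals in blocks.items()
    }


blocks = {
    "smooth": [0.5] * 16,                    # constant region, one occupied bin
    "feature": [i / 16 for i in range(16)],  # values spread evenly over all bins
}
levels = choose_resolution(blocks)
```

A constant block occupies a single histogram bin and has zero entropy, so a coarse copy loses nothing; a block whose values spread across all eight bins reaches the maximum of 3 bits and is marked for high resolution.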

5. Research:

PI: Kwan-Liu Ma

Collaborators: Tony Mezzacappa, ORNL; John Blondin, NCSU

Title: Parallel Visualization of Large Time-Varying 3D Vector Fields (An SC07 submission summarizing this work is presently under review.)

Objective: Enable scalable, interactive visualization of large time-varying 3D vector fields using a parallel computer.

SciDAC Relevance:

For most SciDAC applications, the ability to visualize and interpret time-varying 3D vector fields is crucial to understanding the complex phenomena being modeled. In the past, however, time-varying 3D vector fields were either not examined directly or examined only in a reduced space. This research project introduces the first scalable parallel algorithm for visualizing time-varying 3D vector fields to their full extent. This new capability has already produced previously unseen pictures that reveal tremendous detail and the intrinsic structure of some vector fields.

6. Outreach:

PI: Kwan-Liu Ma

Title: SC06 Workshop on Ultra-scale Visualization held on November 13, 2006.

The output from massively parallel scientific simulations is so voluminous and complex that advanced visualization technologies are necessary to interpret the calculated results. Even though visualization technology has progressed significantly in recent years, we are barely capable of visualizing and analyzing terascale data to its full extent, and petascale datasets are on the horizon. This workshop aimed to address this pressing issue by fostering communication between visualization researchers and practitioners. The workshop attendees were introduced to the latest research innovations in large data visualization. Through the open Q&A sessions, the attendees also had the chance to help shape future research directions.

This one-day workshop was co-located with SC 2006. The Workshop was composed of twelve invited talks. The scope of the Workshop included topics on algorithms, systems, tools, and application driven solutions. The workshop was very well attended and was very successful.

7. Outreach:

PI: Kwan-Liu Ma

Title: SC07 Workshop on Ultrascale Visualization Proposal (submitted to the Supercomputing 2007 Conference on April 13th)

Objective: This workshop aims at disseminating the latest research innovations in large data visualization to the SC community and fostering greater dialogue among researchers facing common challenges.

Potential Impact:

The annual SC Conferences gather computational scientists and HPC practitioners, making them the best venue for disseminating IUSV's research results and findings. The same workshop held at SC06 had 12 invited talks, given mostly by SciDAC researchers. That workshop drew full attendance and created a new channel for research exchange. Building on that success, this second workshop will encourage participation from outside SciDAC, such as NSF projects relevant to SciDAC's mission, to foster greater interaction and unite related efforts.

8. Outreach:

PI: John Owens

ACM SIGGRAPH 2007 course

ACM/IEEE SC 2007 course

Title: High Performance Computing on GPUs with CUDA

Objective: Educate the scientific computing community on how to capitalize on the power of the new generation of GPUs in scientific computing and visualization

SciDAC Relevance:

"NVIDIA’s Compute Unified Device Architecture (CUDA) platform is a co-designed hardware and software stack that expands the GPU beyond a graphics processor to a general-purpose parallel coprocessor with tremendous computational horsepower, and makes that horsepower accessible in a familiar environment – the C programming language. In this tutorial, NVIDIA engineers will partner with academic and industrial researchers to present CUDA and discuss its advanced use for science and engineering domains." Together with multi-core platforms, computing with GPU acceleration is anticipated to revolutionize next generation scientific computing and visualization.

9. Outreach:

PI: Kwan-Liu Ma

"Meeting the Scientists" Panel Proposal

(submitted to the Visualization 2007 Conference on May 31st)

Objective: Increase the interaction between SciDAC and the Visualization communities, and invite more visualization researchers to work on SciDAC applications.

Potential Impact:

The attendees of the annual Visualization Conferences are mainly researchers and practitioners in the field of visualization. Over the past few years, it has been a continuing goal of the Visualization Conference organizers to bring domain scientists to the Conferences, but there was little success due to the cost. Through SciDAC IUSV's outreach effort, domain scientists are invited to present their applications and visualization needs to the greater visualization community. This direct interaction will potentially lead to new collaborations and research activities, whose outcome could in return greatly benefit the SciDAC community.

_____________________________________

Publications

Title: Visualizing Multivariate Volume Data From Turbulent Combustion Simulations

Authors: Hiroshi Akiba, Kwan-Liu Ma, Jacqueline Chen, and Evatt Hawkes

In: IEEE CS and the AIP, Computing in Science & Engineering, March/April 2007, 76 – 83.

Qishi Wu, Jinzhu Gao, Mengxia Zhu, Nageswara Rao, Jian Huang, and S. Sitharama Iyengar. “Self-Adaptive Pipeline Configuration for Remote Visualization,” accepted (in press), IEEE Transactions on Computers.

Shubhabrata Sengupta, Mark Harris, Yao Zhang, and John Owens. “Scan Primitives for GPU Computing,” in Graphics Hardware 2007, pages 97-106.

For more information, click here http://vis.cs.ucdavis.edu/IUSV/

SciDAC Scientific Data Management Center FY07 Accomplishments

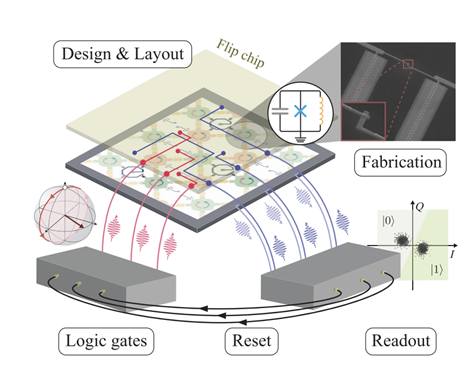

1. The Kepler Scientific Workflow System Used for Complex Fusion Simulation

A practical bottleneck to more effective use of available computational and data resources is the automation of the simulation process. Kepler provides solutions and products for effective modeling, design and execution of scientific workflows. Kepler is a multi-site open source effort, co-founded by the SDM center. Recently, we have applied Kepler technology to the Center for Plasma Edge Simulation (CPES) fusion project. CPES is a Fusion Simulation Project whose aim is to develop a novel integrated plasma edge simulation framework. The simulation requires automating the coupling of two complex codes, XGC-0 and M3D. Figure 1 below shows a workflow that regularly transfers data from the machine with over 4000 processors that runs XGC-0 to a smaller machine for equilibrium and linear stability computations. If the linear stability test fails, the workflow system stops the XGC-0 code, and a job is submitted to perform a nonlinear parallel M3D-MPP computation. Based on the results, XGC-0 can be restarted using the updated data from the nonlinear computation. This has the great advantage of avoiding wasted compute resources, because computations are stopped as soon as they are found to be unstable. In addition, the workflow system eliminates the need for human monitoring and coordination of task submissions. Another part of the workflow generates intermediate images and status reports on the progress of the workflow process. Such automation of simulation processes is essential as simulations are scaled to larger and larger machines with many thousands of cores.

Figure 1. Code coupling workflow steers computations automatically when computations are found unstable, eliminating waste of computational resources
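The control logic of the coupling workflow described above can be sketched as a simple loop. This is plain Python written for illustration and is not Kepler's actor-based workflow definition; `couple_codes`, `is_stable`, and `run_m3d` are hypothetical names, and the numeric snapshots are toy stand-ins for the data transferred from XGC-0.

```python
def couple_codes(xgc_outputs, is_stable, run_m3d):
    """Walk through XGC-0 outputs; on instability, stop, run M3D, and restart.

    xgc_outputs: iterable of data snapshots transferred from the XGC-0 run.
    is_stable:   hypothetical linear stability test applied to a snapshot.
    run_m3d:     hypothetical nonlinear computation returning updated data.
    Returns a log of the actions the workflow took.
    """
    log = []
    for step, snapshot in enumerate(xgc_outputs):
        log.append(("transfer", step))
        if is_stable(snapshot):
            continue  # nothing to do, keep XGC-0 running
        log.append(("stop_xgc0", step))
        updated = run_m3d(snapshot)
        log.append(("restart_xgc0", step, updated))
    return log


# Toy stand-ins: a snapshot is a number that counts as unstable above 1.0.
actions = couple_codes(
    xgc_outputs=[0.2, 0.7, 1.4],
    is_stable=lambda s: s <= 1.0,
    run_m3d=lambda s: round(s * 0.5, 3),
)
```

In this toy run the first two snapshots pass the stability test and only the third triggers the stop, nonlinear computation, and restart sequence, with no person watching the job.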

2. Feature Extraction and Tracking Technology Applied to Identify Fusion Plasma Behavior

The SDM center is developing scalable algorithms for the interactive exploration of large, complex, multi-dimensional scientific data. By applying and extending ideas from data mining, image and video processing, statistics, and pattern recognition, we are developing a new generation of computational tools and techniques that are being used to improve the way in which scientists extract useful information from data. Recently, these technologies have been applied to identifying the movement of “blobs” in images from fusion experiments. A blob is a coherent structure in the image that carries heat and energy from the center of the torus to the wall. The figure below shows bright blobs extracted from experimental images from the National Spherical Torus Experiment (NSTX). The blobs are high energy regions. If they hit the torus wall that confines the plasma, the wall can vaporize. Figure 2 shows the movement of the blobs over time. A key challenge to the analysis is the lack of a precise definition for these structures. We have used multiple techniques to identify and track the blobs over time. The top row is the original image after removing camera noise. The second row is the image after removal of the ambient or background intensity, which is approximated by the median of the sequence. In the third row, we use image processing techniques to identify and track the blobs over time. The goal is to validate and refine the theory of plasma turbulence.
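The background-removal and tracking steps can be sketched on toy data. The median-of-sequence background follows the description above; the fixed threshold, the 4-connected region search, and the centroid tracking are assumptions chosen for this example, since the text notes that several techniques were used and that blobs lack a precise definition. All function names are hypothetical.

```python
from statistics import median


def remove_background(frames):
    """Subtract the per-pixel median over the sequence from every frame."""
    rows, cols = len(frames[0]), len(frames[0][0])
    background = [
        [median(f[r][c] for f in frames) for c in range(cols)]
        for r in range(rows)
    ]
    return [
        [[f[r][c] - background[r][c] for c in range(cols)] for r in range(rows)]
        for f in frames
    ]


def find_blobs(frame, threshold):
    """Return the 4-connected regions of pixels brighter than `threshold`."""
    rows, cols = len(frame), len(frame[0])
    seen, blobs = set(), []
    for r in range(rows):
        for c in range(cols):
            if frame[r][c] <= threshold or (r, c) in seen:
                continue
            stack, blob = [(r, c)], []
            seen.add((r, c))
            while stack:
                y, x = stack.pop()
                blob.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and (ny, nx) not in seen
                            and frame[ny][nx] > threshold):
                        seen.add((ny, nx))
                        stack.append((ny, nx))
            blobs.append(sorted(blob))
    return blobs


def centroid(blob):
    """Mean (row, column) position of a blob's pixels."""
    n = len(blob)
    return (sum(y for y, _ in blob) / n, sum(x for _, x in blob) / n)


# Three toy 3x4 frames: uniform background of 1 with a bright pixel moving right.
frames = [[[1, 1, 1, 1] for _ in range(3)] for _ in range(3)]
for t, frame in enumerate(frames):
    frame[1][t] = 9
cleaned = remove_background(frames)
track = [centroid(find_blobs(f, threshold=4)[0]) for f in cleaned]
```

Because the bright pixel occupies each location in only one of the three frames, the per-pixel median recovers the flat background, and the resulting track of centroids moves one column to the right per frame.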

3. Scientific Data Indexing used for Identifying Malicious Network Attacks

As the volume of data grows, there is an urgent need for efficient searching and filtering of large-scale scientific multivariate datasets with hundreds of searchable attributes. FastBit is an extremely efficient bitmap indexing technology, developed under the Base Research program and used by the SDM center. It uses a CPU-friendly bitmap compression technique which is especially suitable for numeric scientific data. FastBit performs 12 times faster than any known compressed bitmap index in answering range queries. Because of its speed, FastBit facilitates real-time analysis of data, searching over billions of data values in seconds. FastBit has been applied to several application domains, including finding flame fronts in combustion data, searching for rare events among billions of high energy physics collision events, and more recently facilitating query-based visualization. Figure 3 below shows a 3D histogram over the IP address space and time, used to identify malicious network traffic attacks. The query-driven visualization reveals consecutive regions that represent coordinated attacks. It was obtained in real time by using FastBit.

Figure 3. Identifying network attacks using FastBit indexing
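To show why bitmap indexes suit multi-attribute range queries, the sketch below builds one bitmap per value bin and answers a two-attribute query with bitwise operations. It is a minimal, uncompressed illustration that uses Python integers as bit sets; it omits the compression step the text credits for FastBit's speed and does not use FastBit's API. The attribute names, bin edges, and function names are hypothetical.

```python
def build_bitmap_index(values, edges):
    """One bitmap (a Python int used as a bit set) per bin [edges[i], edges[i+1])."""
    bitmaps = [0] * (len(edges) - 1)
    for row, v in enumerate(values):
        for b in range(len(edges) - 1):
            if edges[b] <= v < edges[b + 1]:
                bitmaps[b] |= 1 << row  # mark this row in the bin's bitmap
                break
    return bitmaps


def range_query(bitmaps, edges, lo, hi):
    """Rows with lo <= value < hi, where lo and hi fall on bin edges."""
    hits = 0
    for b in range(len(edges) - 1):
        if lo <= edges[b] and edges[b + 1] <= hi:
            hits |= bitmaps[b]  # OR together the bitmaps of covered bins
    return hits


def rows_of(bitmap):
    """Expand a bit set into a sorted list of row numbers."""
    return [i for i in range(bitmap.bit_length()) if bitmap >> i & 1]


temperature = [310, 1250, 980, 1890, 450, 1500]
pressure = [1.2, 3.4, 0.8, 2.9, 3.1, 0.5]
t_edges = [0, 500, 1000, 1500, 2000]
p_edges = [0.0, 1.0, 2.0, 3.0, 4.0]
t_index = build_bitmap_index(temperature, t_edges)
p_index = build_bitmap_index(pressure, p_edges)

# temperature >= 1000 AND pressure >= 2.0, combined with one bitwise AND
hot = range_query(t_index, t_edges, 1000, 2000)
dense = range_query(p_index, p_edges, 2.0, 4.0)
matches = rows_of(hot & dense)
```

Each condition is answered by OR-ing a few precomputed bitmaps, and conditions on different attributes combine with a single AND, so the raw values are never rescanned at query time.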

For more information, click this link http://sdmcenter.lbl.gov

High Performance, Petascale Visualization and Analytics FY07 Accomplishments

1. New capability: Query-Driven Visualization and Analytics. One of the primary long-term challenges in scientific research is to perform knowledge discovery and hypothesis testing on datasets of growing size and complexity produced by simulation and experiment. We have performed research aimed at producing a new breed of technology called "Query-Driven Visualization and Analytics" that combines visualization, analytics, data mining and scientific data management technologies. This work, which spans multiple years of research effort, has proven successful in two key regards: (1) for fundamental visualization algorithms like isocontouring, it performs faster than the fastest known isosurface implementation; (2) it was shown to reduce the knowledge discovery "duty cycle" (i.e., time-to-answer) from days to seconds when applied to a massive network traffic database and used to discover and characterize a complex, distributed and coordinated network attack. Based upon the success of these accomplishments, these ideas are being adapted for use on general science problems and datasets and integrated into production-quality, petascale-capable software infrastructure to be deployed at DOE's open computing facilities.

2. New capability: remote delivery of scientific visualization results generated at central computing facilities. Among the many barriers to efficient use of centrally located computing facilities is the inability to navigate visually and effectively through large and complex datasets. This result introduces a new technique that enables such navigation by overcoming the effects of network latency and fixed image resolution, and is sufficiently general purpose to be used in conjunction with results produced by nearly any scientific visualization application. Such technologies lower the barrier to using the advanced computing and analysis technologies needed to tackle contemporary science challenges. This technology has the potential to impact virtually all data intensive sciences that rely on some form of visual data analysis.

3. New capability: combining visual data analysis with quantitative data analysis to accelerate scientific discovery. There are two primary modes of scientific discovery in data-intensive activities: (1) confirming the presence or absence of a feature; and (2) discovering something completely unexpected. Our new work aims to provide the means to discover completely unexpected phenomena in large and complex datasets. Our approach uses a novel combination of visual and quantitative analysis technologies to help a researcher discover correlations and relationships hidden in large, complex, multivariate and time-varying datasets. This early work has been successfully applied to computational biology, to aid in understanding gene expression data, and to combustion modeling, to aid in understanding the fundamental processes that comprise combustion.